University of Washington Department Connectivity

Wherein an arbitrary metric is used to demonstrate what we all know to be true.

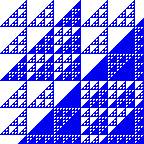

This is a force-directed graph of the departments of the UW, constructed such that the attraction 1 between two departments (D1 and D2) is proportional to the sum of the number of prerequisite courses in D2 required for every course in D1 and vice verse (ie. prerequisites in D1 for a course in D2). The area of each department is proportional to the number of courses offered, and departments are colored by the type of credits they most commonly offer 2. Click and drag departments in order to interact with the graph.

Note the general arrangement of the graph: the mathematics department, a large golden circle, is at the center of the densest area of the graph, surrounded by various engineering and hard science departments, which are themselves bordered by the softer sciences and humanities. Finally, the edges of the graph are occupied by a nebula of unconnected departments that eschew interdepartmental prerequisites. This is, in general, what one expects a university to look like.

The data-set used to construct this graph is the result of an attempt to build a better course catalog/academic planning system for the UW: I figured that the most elegant way to select my freshman-year courses would involve applying some graph algorithm to the digraph formed by the university’s courses and their prerequisites. Solving for possible academic plans is actually a pretty interesting problem involving starting from a given set of reachable nodes (courses with satisfied prerequisites) and finding a subgraph of the entire course-graph that satisfies a set of constraints (major requirements, desired courses, credits per semester), and minimizes a cost-function (time to graduate, number of courses to take). I was quickly distracted by some other interesting problem, but not before I’d downloaded the UW course catalog, parsed all of the entries (include horrid stuff like either 2.0 in MATH 136, 2.0 in MATH 308, or 2.0 in MATH 318; 2.0 in MATH 324

- mostly successfully), and thrown all of the data into Riak 3. The end result of all of that work is that I have a nice kev/value store containing about 11,000 json blobs of approximately the following format:

{

"area": [

"NW"

],

"name": "CSE 143",

"description": "Continuation of CSE142",

"title": "Computer Programming II",

"credits": {

"max": 5,

"min_per": 5,

"max_per": 5

},

"dependencies": {

"raw": "Prerequisite: CSE142.",

"all_courses": ["CSE142"],

},

"offered": "AWSpS"

}As Riak is very much not a traditional SQL database, calculating the connectivity metric between departments takes a little more thought than typing up the necessary SQL queries. In particular, all queries other than key retrieval (including ranges of keys, with secondary indexes) or full text search must be implemented as a map-reduce job distributed over multiple database nodes. Riak further complicates this by offering an odd choice of languages in which to write said map-reduce job: either JavaScript (poorly designed, weakly typed, imperative) or Erlang (better than JavaScript but flawed in its own ways 4, obscure, strongly typed, functional). Fortunately, the necessary code for such a simple metric isn’t horridly complicated:

map_phase(Obj,_,_) ->

%% Deserialize the JSON-encoded course description to the native erlang term:

Blob = jiffy:decode( riak_object:get_value(Obj)),

%% Extract the current department - the model module is just a bunch of accessors

%% that simplify traversing the term.

Department = strip_name( model:name(Blob)),

%% Generate a dict counting all external dependencies:

Dependencies = sets:fold(

fun (Course,Dict) ->

DependsUpon = strip_name(Course),

if

DependsUpon /= Department ->

dict:update_counter(DependsUpon,1,Dict);

true ->

Dict

end

end, dict:new(), model:all_courses( model:dependencies(Blob))

),

%% We also want to extract the area in which this department most often gives credit

Areas = dict:from_list([{Area,1} || Area <- model:area(Blob)]),

%% As this is only the map pass, we need to return a structure that lets us merge

%% the information from multiple courses in a simple department:

[dict:from_list([{Department,{Dependencies,Areas,1}}])].

%% The reduce phase is very simple - effectively the (Haskell) mconcat function

%% for something that's obviously a monoid.

merge_sum(Left,Right)-> dict:merge(fun (_,X,Y) -> X + Y end, Left,Right).

reduce_phase([],_)-> [];

reduce_phase([X|Rest],Const) -> reduce_phase(X,Rest,Const).

reduce_phase(X,[],_Const) -> [X];

reduce_phase(X,[Y|Rest],Const) ->

reduce_phase(dict:merge(fun (_Department, {LDeps,LAreas,LSize},{RDeps,RAreas,RSize}) ->

{merge_sum(LDeps, RDeps),

merge_sum(LAreas, RAreas),

LSize + RSize

}

end, X, Y)

, Rest, Const).d3 uses constraint relaxation, so the code actually implements this by setting the

desired

distance between two nodes inversely proportional to the number of prerequisites. However, the explanation in terms of attraction is conceptually simpler and accurate enough.↩The data for color is incomplete and dubious, but correct enough to offer a good sense of the humanities/not-humanities divide.↩

Why Riak? Mainly because of Bryan O’Sullivan’s wonderful work with Haskell’s JSON support and the relatively simple API for the Haskell Riak client library. If your data can be forced to behave something like a conflict-free replicated data types (read that paper!), storing it as a JSON blob in Riak is easier than using any other Haskell database interface. Riak also has a number of advantageous properties (eg. extreme fault tolerance) that make it an interesting choice for any application with a query pattern that matches Riak’s model.↩

In no particular order, things I can’t stand about Erlang:

The default string type is just a list of integers - Haskell also made this mistake, but now has ByteStrings and Text. Erlang just has a

binary

type roughly corresponding to Haskell’s ByteStrings with a little support for various character encodings. Unfortunately, all the common string manipulation functions are in the string module, which requires the list-of-integers string type.The

functional

part of Erlang just feels clunky coming from a Haskell background. I should be able to pass around composed functions with no performance hit, operators should be first-class, lots of parentheses should be avoidable with ($), libraries are missing important functions, parameters are in the wrong order, etc…Erlang is dynamically typed. I can’t write code in dynamically typed languages without making lots of silly errors. Erlang does have dialyzer, a very interesting static analysis tool that serves as a type checker, but it would be nice if the compiler would force my code to type check… I miss the ability to construct meaningful types - support for sum types (without the

tag atom

hack) and real record types (not just tuples and macros) would be helpful.

In short, Erlang is great for some domains (cool pattern matching! Message passing is great! OTP is amazing!), but Haskell beats it in the most important department for a language: building meaningful abstractions.↩